Parabolic dynamics

1. Survey

1.1. What does hyperbolic, elliptic mean in dynamics?

Let $\phi_{\mathbb R}=(\phi_t)_{t\in\mathbb R}$ be a dynamical system, say a flow on a metric space $(X,d)$. Even if deterministic, it can exhibit chaotic behaviour. Chaos has several characteristics. One of them is the butterfly effect: sensitive dependence on initial conditions (SDIC).

Definition 1 A flow $\phi_{\mathbb R}$ has SDIC if there exists $\varepsilon>0$ such that $\forall x\in X$, $\forall\delta>0$, $\exists y\in X$ such that $d(x,y)<\delta$ and $\exists t>0$ such that $d(\phi_t(x),\phi_t(y))\geq\varepsilon$.

A quantitative measurement of SDIC is provided by the dependence of $t$ on $\delta$.

Definition 2 Let $f:[0,+\infty)\to[0,+\infty)$ be a nondecreasing function. A flow $\phi_{\mathbb R}$ has SDIC of order $f$ if, in the above definition, one can take $t$ such that $\delta f(t)\leq\varepsilon$.

This leads us to a rough division of dynamical systems:

- Elliptic: no SDIC, or if any, subpolynomial.

- Hyperbolic: SDIC is fast, exponential.

- Parabolic: SDIC is slow, subexponential.

This trichotomy is advertised in Katok-Hasselblatt’s book.

For instance, entropy is a measure of chaos. Elliptic or parabolic dynamical systems have zero entropy. Hyperbolic dynamical systems have positive entropy.
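To make the trichotomy concrete, here is a minimal numerical sketch on three toy maps of the 2-torus. They are our own illustrative choices, not examples from the lecture: a translation (elliptic), the linear shear $(x,y)\mapsto(x+y,y)$ (a toy parabolic map) and Arnold's cat map (hyperbolic). The sketch counts how many steps two points at distance $\delta$ need before they are $\varepsilon$ apart.

```python
import math

def torus_dist(p, q):
    """Euclidean distance on the flat torus R^2 / Z^2."""
    total = 0.0
    for a, b in zip(p, q):
        d = abs(a - b) % 1.0
        d = min(d, 1.0 - d)
        total += d * d
    return math.sqrt(total)

ALPHA = (math.sqrt(2) - 1, math.sqrt(3) - 1)  # illustrative irrational translation vector

def rotation(p):
    """Elliptic: translation by ALPHA, an isometry of the torus."""
    return ((p[0] + ALPHA[0]) % 1.0, (p[1] + ALPHA[1]) % 1.0)

def shear(p):
    """Parabolic toy model: (x, y) -> (x + y, y); a vertical offset delta drifts linearly in x."""
    return ((p[0] + p[1]) % 1.0, p[1])

def cat(p):
    """Hyperbolic: Arnold's cat map, matrix [[2, 1], [1, 1]]."""
    return ((2 * p[0] + p[1]) % 1.0, (p[0] + p[1]) % 1.0)

def separation_time(step, p, q, eps, n_max):
    """First n <= n_max with d(T^n p, T^n q) >= eps, or None if it never happens."""
    for n in range(n_max + 1):
        if torus_dist(p, q) >= eps:
            return n
        p, q = step(p), step(q)
    return None

p = (0.3, 0.2)
print(separation_time(rotation, p, (0.3, 0.201), 0.1, 1000))  # None: distances are preserved
print(separation_time(shear, p, (0.3, 0.201), 0.1, 1000))     # 100: separation about n * delta
print(separation_time(cat, p, (0.300001, 0.2), 0.1, 1000))    # 13: separation about delta * lambda^n
```

With $\delta=10^{-3}$ the shear needs about $\varepsilon/\delta$ steps, which is polynomial in $1/\delta$. With $\delta=10^{-6}$ the cat map needs only about $\log(\varepsilon/\delta)/\log\lambda$ steps.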

1.2. Examples of elliptic dynamical systems

- Circle diffeomorphisms.

- Linear flows on the torus (these two examples are related, one is the suspension of the other).

- Billiards in convex domains. Usually, there are many periodic orbits, trapping regions, caustics.

This is the realm of Hamiltonian dynamics and KAM theory.

1.3. Examples of hyperbolic dynamical systems

- Automorphisms $A$ of a torus $\mathbb T^d$ which are Anosov, i.e. all eigenvalues have absolute value $\neq1$. Then orbits diverge exponentially. Take $x\in\mathbb T^d$ and a unit eigenvector $v$ with eigenvalue $\lambda$, $|\lambda|>1$, and set $y=x+\delta v$. Then $A^ny=A^nx+\delta\lambda^nv$ mod $\mathbb Z^d$, and the distance between $A^nx$ and $A^ny$ reaches $\varepsilon$ in time $T$ such that $\delta|\lambda|^T=\varepsilon$. Since $|\lambda|>1$, the separation grows exponentially.

- Geodesic flows on surfaces of constant negative curvature.

- Sinai’s billiard: a rectangle with a circular obstacle. It is roughly equivalent to the motion of two hard spheres on a torus. Scattering occurs after hitting the obstacle, due to its strict convexity.
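For the cat map $A=\begin{pmatrix}2&1\\1&1\end{pmatrix}$ (our illustrative choice of Anosov automorphism), the divergence can be computed exactly. Indeed $A^ne_1=(F_{2n+1},F_{2n})$ with Fibonacci numbers, so the time $T(\delta)$ needed to separate two points at distance $\delta$ up to $\varepsilon$ should be close to $\log(\varepsilon/\delta)/\log\lambda$, with $\lambda=(3+\sqrt5)/2$. A minimal sketch comparing the two follows. Exact integer iterates avoid round-off, and measuring displacements in $\mathbb R^2$ is valid as long as they stay below $1/2$.

```python
import math

LAMBDA = (3 + math.sqrt(5)) / 2  # expanding eigenvalue of [[2, 1], [1, 1]]

def escape_time(delta, eps):
    """Smallest n with |A^n (delta, 0)| >= eps, using exact integer iterates of A."""
    v = (1, 0)  # integer vector A^n e_1
    n = 0
    while delta * math.hypot(*v) < eps:
        v = (2 * v[0] + v[1], v[0] + v[1])
        n += 1
    return n

def predicted_time(delta, eps):
    """Heuristic prediction from delta * LAMBDA**T = eps."""
    return math.log(eps / delta) / math.log(LAMBDA)

for k in (3, 6, 9, 12):
    delta = 10.0 ** (-k)
    print(k, escape_time(delta, 0.1), round(predicted_time(delta, 0.1), 2))
```

Each extra decimal digit of precision in $\delta$ buys only about $\log 10/\log\lambda\approx2.4$ more steps. This is the logarithmic dependence of $T$ on $1/\delta$.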

This is the realm of the dynamics of Anosov, Sinai and others. Structural stability occurs: hyperbolic systems form an open set.

1.4. Examples of parabolic dynamical systems

- Horocycle flows on surfaces of constant negative curvature. They were introduced by Hedlund, followed by Dani, Furstenberg, Marcus, Ratner.

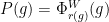

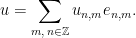

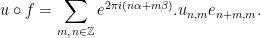

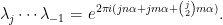

- Nilflows on nilmanifolds. If $\Gamma$ is a cocompact lattice in a nilpotent Lie group $N$, let $(\exp tW)_{t\in\mathbb R}$ be a $1$-parameter subgroup of $N$ acting by right translations on $N$. It descends to $\Gamma\backslash N$. Both examples are algebraic, which provides us with tools to study them. However, they are a bit too special to illustrate parabolic dynamics in general.

- Smooth area-preserving flows on higher genus surfaces.

- Ehrenfest’s billiard: rectangular, with a rectangular obstacle. Here, SDIC is only caused by discontinuities due to corners. More generally, there are billiards in rational polygons (angles belong to $\pi\mathbb Q$), or equivalently linear flows on translation surfaces. This field is known as Teichmüller dynamics. Forni considers them as elliptic systems with singularities; I prefer to stress their parabolic character.

1.5. Uniformity

Within hyperbolic dynamics, there is a subdivision in uniformly hyperbolic, nonuniformly hyperbolic and partially hyperbolic.

In the same manner, we see horocycle flows as uniformly parabolic, and nilflows as partially parabolic, with both elliptic and parabolic directions. Area-preserving flows on surfaces have fixed points, which make them nonuniformly parabolic: the shearing is not uniform. However, there is no formal definition of these notions.

1.6. More examples of parabolic behaviours

Parabolicity is not stable under perturbations. However, Ravotti has discovered a  -parameter perturbation of unipotent flows in $\mathrm{SL}(3,\mathbb R)$.

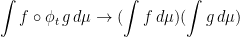

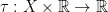

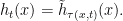

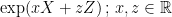

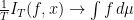

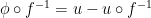

A flow $\psi_{\mathbb R}$ is a time-change of a given flow $\phi_{\mathbb R}$ if there exists a function $\tau:X\times\mathbb R\to\mathbb R$ such that

$$\psi_t(x)=\phi_{\tau(x,t)}(x).$$

For $\psi_{\mathbb R}$ to be a flow, it is necessary that $\tau$ be a cocycle, i.e. $\tau(x,t+s)=\tau(x,t)+\tau(\psi_t(x),s)$.

Both flows have the same trajectories. A feature of parabolic dynamics is that a typical time-change $\psi_{\mathbb R}$ is not isomorphic to $\phi_{\mathbb R}$ and has new chaotic features. Indeed, an isomorphism would provide a solution to a cohomological equation, and this equation has obstructions.
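To see what the cohomological equation and its obstructions look like in the simplest, elliptic case, here is a sketch based on our own choice of example. It uses the linear flow on $\mathbb T^2$ with frequency vector $v$ and generator $U=v_1\partial_x+v_2\partial_y$. For a trigonometric polynomial $f$, the equation $Uu=f$ is solved mode by mode via $\hat u(k)=\hat f(k)/(2\pi i\,k\cdot v)$, and the only obstruction is the mean $\hat f(0)=\int f$. This sketch does not model the additional obstructions that the text attributes to parabolic flows.

```python
import cmath
import math

def evaluate(coeffs, x, y):
    """Evaluate sum_k c_k exp(2 pi i (k1 x + k2 y)) for coefficients {(k1, k2): c_k}."""
    return sum(c * cmath.exp(2j * math.pi * (k1 * x + k2 * y)) for (k1, k2), c in coeffs.items())

def solve_cohomological(f, v):
    """Solve U u = f with U = v1 d/dx + v2 d/dy, for a trigonometric polynomial f."""
    if abs(f.get((0, 0), 0)) > 1e-12:
        raise ValueError("obstruction: f must have zero average")
    u = {}
    for (k1, k2), c in f.items():
        if (k1, k2) == (0, 0):
            continue
        # k . v != 0 for k != 0 because v1, v2 are rationally independent
        u[(k1, k2)] = c / (2j * math.pi * (k1 * v[0] + k2 * v[1]))
    return u

def derivative_along(coeffs, v, x, y, h=1e-6):
    """Central finite difference of a trigonometric polynomial along the vector v."""
    plus = evaluate(coeffs, x + h * v[0], y + h * v[1])
    minus = evaluate(coeffs, x - h * v[0], y - h * v[1])
    return (plus - minus) / (2 * h)

v = (1.0, math.sqrt(2))  # irrational slope
f = {(1, 0): 0.5, (-1, 0): 0.5, (2, -1): 0.25j, (-2, 1): -0.25j}  # real-valued, zero mean
u = solve_cohomological(f, v)
print(abs(derivative_along(u, v, 0.3, 0.7) - evaluate(f, 0.3, 0.7)))  # small: U u = f
```

For general smooth $f$ one also needs a Diophantine condition on $v$ to control the small divisors $k\cdot v$. Here $f$ has finitely many modes, so this issue does not arise.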

1.7. Program

Study smooth time-changes of algebraic flows.

This goes back to Marcus in the 1970s. Algebraic tools break down, so softer methods are required: geometric mechanisms. Also, we expect the features exhibited by time-changes to be more typical.

2. Chaotic properties

2.1. Definitions

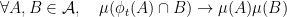

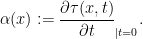

Definition 3 Let  be a measure space with finite measure. Let

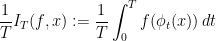

be a measure space with finite measure. Let  be a measure preserving flow. The trajectory of a point

be a measure preserving flow. The trajectory of a point  is equidistributed with respect to

is equidistributed with respect to  if for every smooth observable

if for every smooth observable  ,

,

tends to

tends to  as

as  tends to

tends to  .

.

is ergodic if

is ergodic if  almost every

almost every  has equidistributed orbit with respect to

has equidistributed orbit with respect to  .

.

This is Boltzmann hypothesis.
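A minimal sketch of Definition 3 for linear flows on $\mathbb T^2$ (our choice of example). For an irrational direction, the time averages of a zero-mean observable tend to $0=\int f$. For a rational direction the orbit is closed, and the average stays at the value of $f$ on that orbit.

```python
import math

def time_average(f, x0, v, T, n=100_000):
    """Midpoint Riemann sum for (1/T) * integral_0^T f(x0 + t v mod 1) dt on the 2-torus."""
    dt = T / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * dt
        total += f((x0[0] + t * v[0]) % 1.0, (x0[1] + t * v[1]) % 1.0)
    return total / n

def f(x, y):
    """Smooth observable with zero integral over the torus."""
    return math.cos(2 * math.pi * (x - y))

x0 = (0.1, 0.4)
print(time_average(f, x0, (1.0, math.sqrt(2)), 200.0))  # close to 0 = integral of f
print(time_average(f, x0, (1.0, 1.0), 200.0))           # stays at f(x0) = cos(0.6 pi): closed orbit
```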

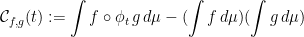

Definition 4 Say $\phi_{\mathbb R}$ is mixing if for all $f,g\in L^2(X,\mu)$, the correlation

$$\int_X f\circ\phi_t\,g\,d\mu-\frac{1}{\mu(X)}\int_Xf\,d\mu\int_Xg\,d\mu$$

tends to $0$ as $t$ tends to $+\infty$.

This means decorrelation of functions. This implies ergodicity.

The speed at which decorrelation occurs is a significant feature too.

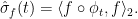

Definition 5 The speed of mixing is a function $v$ such that for all smooth observables $f,g$, the correlation decays at speed $v$, i.e. for some constant $C_{f,g}$,

$$\left|\int_X f\circ\phi_t\,g\,d\mu-\frac{1}{\mu(X)}\int_Xf\,d\mu\int_Xg\,d\mu\right|\leq C_{f,g}\,v(t)$$

as $t$ tends to $+\infty$.

2.2. Relation to the trichotomy

Elliptic systems are often not ergodic, and even when they are, they are not mixing.
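A quick illustration of the lack of mixing, using a circle rotation $R_\alpha(x)=x+\alpha$ with the golden mean as $\alpha$ (our choice). For $f=g=\cos(2\pi x)$ the correlation equals $\frac12\cos(2\pi n\alpha)$. Instead of tending to $0$, it keeps returning close to $\pm\frac12$.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # illustrative irrational rotation number

def correlation(n, N=64):
    """Midpoint-rule value of int f(x + n*ALPHA) g(x) dx with f = g = cos(2 pi x).

    Both f and g have zero integral, so this is the full correlation; the rule is exact
    (up to rounding) for these trigonometric polynomials.
    """
    total = 0.0
    for j in range(N):
        x = (j + 0.5) / N
        total += math.cos(2 * math.pi * ((x + n * ALPHA) % 1.0)) * math.cos(2 * math.pi * x)
    return total / N

print([round(correlation(n), 3) for n in range(6)])
print(max(abs(correlation(n)) for n in range(1000, 1100)))  # close to 1/2: no decay
```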

Hyperbolic and parabolic systems can be mixing, but at different speeds:

- hyperbolic systems: exponential decay of correlations.

- parabolic systems: we expect that, if mixing occurs, the decay of correlations is slower: polynomial or subpolynomial.

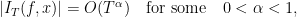

A related concept is that of polynomial deviations of ergodic averages. Suppose $f$ is smooth and has vanishing integral, $\phi_{\mathbb R}$ is ergodic and $x$ is an equidistribution point. Then

$$\left|\int_0^Tf(\phi_t(x))\,dt\right|$$

grows like a power $T^\nu$ with $0<\nu<1$, and no faster. This phenomenon was first discovered for horocycle flows, then for Teichmüller flows (Zorich experimentally, Kontsevich-Zorich for a proof).

2.3. Other features

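Spectral properties. Let $\mathcal U_t$ be the operator $f\mapsto f\circ\phi_t$ on $L^2(X,\mu)$. Then $\mathcal U_t$ is unitary, since $\phi_t$ is invertible and preserves $\mu$. What is its spectrum?

Disjointness of rescalings. Rescaling means a linear time-change $(\phi_t)_{t\in\mathbb R}\mapsto(\phi_{ct})_{t\in\mathbb R}$, $c>0$.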

3. Results

The horocycle flow is mixing (Ratner). Its spectrum is Lebesgue, in particular absolutely continuous.

Time changes of horocycle flows are mixing (Marcus, by shearing). This can be made quantitative (Forni-Ulcigrai).

Disjointness of rescalings fails for horocycle flows, but holds for nontrivial time-changes (Kanigowski-Ulcigrai and Flaminio-Forni). The spectrum is absolutely continuous as well.

Nilflows themselves are not mixing, but typical time changes of nilflows are mixing (Avila-Forni-Ravotti-Ulcigrai).

We shall see geometric mechanisms at work:

- Mixing via shearing.

- Ratner property of shearing.

- Renormalizable parabolic flows.

- Deviations of ergodic integrals.

4. Horocycle flows

Today’s goal is to explain the technique of mixing by shearing.

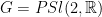

4.1. Algebraic viewpoint

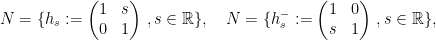

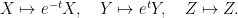

Consider $G=\mathrm{SL}(2,\mathbb R)$ and its subgroups

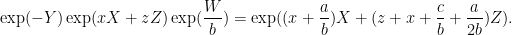

Every matrix can be uniquely written  , hence  . The factors do not commute, because of the key relation

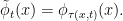

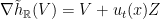

The key relation can be interpreted as a self-similarity property: the horocycle flow $h_{\mathbb R}$ is a fixed point of renormalization by the geodesic flow $g_{\mathbb R}$.

Take a discrete and cocompact subgroup $\Gamma\subset G$. Then $g_{\mathbb R}$ and $h_{\mathbb R}$ act on $G/\Gamma$ by left multiplication. The key relation implies that, for $k>0$, the rescaled flow $h^k_{\mathbb R}=(h_{ks})_{s\in\mathbb R}$ is conjugate to $h_{\mathbb R}$. This fails for other (nonrescaling) time-changes, as I proved recently with Fraczek and Kanigowski.
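Assume the common normalization $g_t=\mathrm{diag}(e^{t/2},e^{-t/2})$ and $h_s=\begin{pmatrix}1&s\\0&1\end{pmatrix}$. Other normalizations change the sign or the factor in the exponent. With this choice, the key relation reads $g_th_sg_{-t}=h_{e^ts}$, and conjugating by $g_{\log k}$ turns $h_{\mathbb R}$ into its rescaling $h^k_{\mathbb R}$. Here is a quick numerical check.

```python
import math

def mul(a, b):
    """Product of 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)) for i in range(2))

def g(t):
    """Assumed normalization of the geodesic subgroup: diag(e^{t/2}, e^{-t/2})."""
    return ((math.exp(t / 2), 0.0), (0.0, math.exp(-t / 2)))

def h(s):
    """Assumed normalization of the horocycle subgroup: [[1, s], [0, 1]]."""
    return ((1.0, s), (0.0, 1.0))

def close(a, b, tol=1e-9):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

t, s = 0.7, 1.3
# Key relation in this normalization: g_t h_s g_{-t} = h_{e^t s}.
print(close(mul(mul(g(t), h(s)), g(-t)), h(math.exp(t) * s)))  # True

# The rescaling h_{ks} (k > 0) is conjugate to h_s via g_{log k}.
k = 2.5
print(close(mul(mul(g(math.log(k)), h(s)), g(-math.log(k))), h(k * s)))  # True
```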

4.2. Geometric viewpoint

Let  denote the upper half plane, with metric

denote the upper half plane, with metric  .

.

Fact.  acts isometrically and transitively on

acts isometrically and transitively on  , and this yields a diffeomorphism of

, and this yields a diffeomorphism of  with the unit tangent bundle

with the unit tangent bundle  .

.

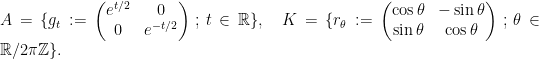

Indeed, the action is by Möbius transformations

on  , and by their derivatives on

, and by their derivatives on  .

.

With this identification, orbits of $g_{\mathbb R}$ are curves which project to geodesics of $\mathbb H$, and coincide with their lifts by their unit speed vectors. On the other hand, orbits of $h_{\mathbb R}$ are curves which project to horocycles of $\mathbb H$, and coincide with their lifts by their outward pointing unit normal vectors. Lifts by inward pointing normal vectors are orbits of the opposite horocycle flow $\bar h_{\mathbb R}$.

$g_{\mathbb R}$ is a hyperbolic flow: it contracts in the direction of $h_{\mathbb R}$-orbits and dilates in the direction of $\bar h_{\mathbb R}$-orbits.
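A small sanity check of the Fact, as a sketch. Möbius transformations with determinant 1 preserve the hyperbolic distance $d(z,w)=\operatorname{arccosh}\left(1+\frac{|z-w|^2}{2\,\mathrm{Im}z\,\mathrm{Im}w}\right)$. The diagonal matrix $\mathrm{diag}(e^{t/2},e^{-t/2})$ acts by $z\mapsto e^tz$, moving $i$ along the vertical geodesic at unit speed. The particular matrix and points are arbitrary choices.

```python
import math

def mobius(m, z):
    """Action of a real 2x2 matrix of determinant 1 on the upper half plane."""
    (a, b), (c, d) = m
    return (a * z + b) / (c * z + d)

def hyp_dist(z, w):
    """Distance for the metric (dx^2 + dy^2) / y^2 on the upper half plane."""
    return math.acosh(1 + abs(z - w) ** 2 / (2 * z.imag * w.imag))

m = ((2.0, 1.0), (3.0, 2.0))  # determinant 1 (arbitrary example)
z, w = 0.3 + 1.2j, -1.0 + 0.5j
print(hyp_dist(z, w), hyp_dist(mobius(m, z), mobius(m, w)))  # equal up to rounding

# diag(e^{t/2}, e^{-t/2}) acts by z -> e^t z: unit-speed motion along the imaginary axis.
t = 0.8
g_t = ((math.exp(t / 2), 0.0), (0.0, math.exp(-t / 2)))
print(hyp_dist(1j, mobius(g_t, 1j)))  # approximately 0.8
```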

4.3. Classical results

Let $\mu$ denote Haar measure on $G$. It maps via $G\to T^1\mathbb H$ to hyperbolic volume. Consider the induced measure on $G/\Gamma$ (still denoted by $\mu$). Then $\mu(G/\Gamma)<\infty$ and $G$ acts on $G/\Gamma$ by measure preserving transformations.

Then

- $g_{\mathbb R}$ is ergodic (Hopf). It is far from being uniquely ergodic (plenty of periodic orbits).

- Both $g_{\mathbb R}$ and $h_{\mathbb R}$ are mixing.

- $h_{\mathbb R}$ is uniquely ergodic (Furstenberg). This means that every orbit is equidistributed with respect to $\mu$.

5. Shearing

This is an alternate way to prove mixing. The idea goes back to Marcus (Annals of Math. 1977). Marcus covered a more general situation, and proved mixing of all orders (i.e. for multicorrelations, integrals involving an arbitrarily large number of functions).

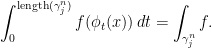

Let us shift viewpoint on the key relation. Let  be a piece of  -orbit of length  . Let

Then  is sheared or tilted in the direction of the geodesic flow.

Key idea in parabolic dynamics: in several parabolic systems, the butterfly effect happens in a special way, namely by shearing. Nearby points move in parallel, but at different speeds. This implies that transverse arcs shear.
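One possible instance of shearing, as a sketch. We take a short geodesic arc as the transverse direction and assume $g_s=\mathrm{diag}(e^{s/2},e^{-s/2})$, $h_t=\begin{pmatrix}1&t\\0&1\end{pmatrix}$ acting on the right. The matrix identity $h_{-t}g_sh_t=g_sh_u$ with $u=t(1-e^{-s})$ shows that the point $xg_s$ of the arc is sent by $h_t$ to $xh_t\,g_s\,h_u$. Relative to the pushed base point $xh_t$, it is displaced along the flow direction by $u\approx ts$, an amount growing linearly in $t$.

```python
import math

def mul(a, b):
    """Product of 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)) for i in range(2))

def g(t):
    """Assumed normalization: geodesic subgroup diag(e^{t/2}, e^{-t/2})."""
    return ((math.exp(t / 2), 0.0), (0.0, math.exp(-t / 2)))

def h(s):
    """Assumed normalization: horocycle subgroup [[1, s], [0, 1]]."""
    return ((1.0, s), (0.0, 1.0))

def shear_offset(s, t):
    """Horocycle offset u with h_{-t} g_s h_t = g_s h_u, namely u = t (1 - e^{-s})."""
    return t * (1 - math.exp(-s))

def close(a, b, tol=1e-9):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

s = 0.01  # position along a short geodesic arc through the base point
for t in (1.0, 10.0, 100.0):
    lhs = mul(mul(h(-t), g(s)), h(t))
    u = shear_offset(s, t)
    print(t, u, close(lhs, mul(g(s), h(u))))  # offset about s * t: linear growth in t
```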

5.1. Recipe for mixing in parabolic dynamics

Here are the ingredients:

- A uniquely ergodic flow $\phi_{\mathbb R}$.

- A transverse direction which is sheared in the direction of the flow.

By assumption, for every $x$, the trajectory $\phi_{[0,T]}(x)$ equidistributes with respect to $\mu$ as $T\to+\infty$. We want to upgrade this to mixing, which is a property of sets. Indeed, assume $\mu(X)=1$ and take measurable sets $A$, $B$ with $\mu(A)>0$. Then

$$\mu(\phi_t(A)\cap B)\to\mu(A)\mu(B)$$

is equivalent to

$$\frac{\mu(\phi_t(A)\cap B)}{\mu(\phi_t(A))}\to\mu(B),$$

i.e. $\phi_t(A)$ equidistributes as $t\to+\infty$.

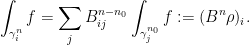

The idea is to cover $A$ by short arcs in the transverse direction. We prove that each such arc equidistributes, and apply Fubini. That is, if $A=\bigcup_i\gamma_i$, apply $\phi_t$. Then $\phi_t(A)=\bigcup_i\phi_t(\gamma_i)$.

Each $\phi_t(\gamma_i)$ becomes close to a long piece of orbit of $\phi_{\mathbb R}$. By unique ergodicity, that piece equidistributes, and this implies equidistribution for $\phi_t(\gamma_i)$.

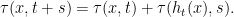

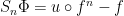

5.2. Time change

Let  be a smooth time change, which is a cocycle with respect to a given smooth flow  , i.e.

We are interested in the flow  defined by

The generator of  is

It is a smooth nonnegative function on  . We assume that  . We denote  by  .

Remark. If  is generated by a smooth vector field  , then  is generated by the vector field  .
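A minimal sketch of a time-change under our own conventions, which may differ from the lecture's normalization (some references write the new generator as $U/\alpha$). On the circle $\mathbb R/\mathbb Z$, take the unit-speed flow $\phi_t(x)=x+t$ and a hypothetical positive function $\alpha$. Define $\psi_t(x)=\phi_{\tau(x,t)}(x)$, where $t=\int_0^{\tau(x,t)}\frac{ds}{\alpha(x+s)}$. The code checks that $\psi$ is a flow and that it agrees with integrating the vector field $\alpha\,\frac{d}{dx}$ directly.

```python
import math

def alpha(x):
    """Hypothetical speed function on the circle R/Z (positive, between 0.5 and 1.5)."""
    return 1.0 + 0.5 * math.cos(2 * math.pi * x)

def travel_time(x, tau, n=2000):
    """Time the new flow needs to cover the arc [x, x + tau]: integral of 1/alpha (Simpson, n even)."""
    step = tau / n
    total = 1 / alpha(x) + 1 / alpha(x + tau)
    for i in range(1, n):
        total += (4 if i % 2 else 2) / alpha(x + i * step)
    return total * step / 3

def tau(x, t):
    """Solve travel_time(x, tau) = t by bisection; alpha <= 1.5 gives tau <= 1.5 t."""
    lo, hi = 0.0, 1.5 * t
    for _ in range(60):
        mid = (lo + hi) / 2
        if travel_time(x, mid) < t:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def psi(x, t):
    """Time-changed flow psi_t(x) = phi_{tau(x, t)}(x), with phi_t(x) = x + t."""
    return (x + tau(x, t)) % 1.0

def rk4(x, t, steps=1000):
    """Integrate dx/dt = alpha(x), i.e. the vector field alpha * d/dx, with classical RK4."""
    dt = t / steps
    for _ in range(steps):
        k1 = alpha(x)
        k2 = alpha(x + dt * k1 / 2)
        k3 = alpha(x + dt * k2 / 2)
        k4 = alpha(x + dt * k3)
        x += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return x % 1.0

def circle_gap(a, b):
    d = abs(a - b) % 1.0
    return min(d, 1.0 - d)

print(circle_gap(psi(0.1, 0.7), rk4(0.1, 0.7)))            # tiny: the generator is alpha * d/dx
print(circle_gap(psi(psi(0.1, 0.3), 0.4), psi(0.1, 0.7)))  # tiny: psi is a flow (cocycle property)
```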

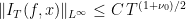

Theorem 6 (Forni-Ulcigrai) Let $h_{\mathbb R}$ be the horocycle flow of a compact surface of constant negative curvature. For any smooth positive function $\alpha$, the time-changed flow $h^\alpha_{\mathbb R}$ is mixing. The proof gives quantitative estimates, which imply that the spectrum is absolutely continuous with respect to Lebesgue measure.

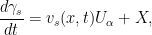

Lemma 7 Let $X$ denote the generator of $g_{\mathbb R}$ and $U_\alpha$ the generator of $h^\alpha_{\mathbb R}$. Take a segment of  -orbit

Let  Then  where

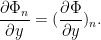

Remark.  , hence  . Thus, as  tends to  ,  tends to a finite limit, the shear rate.

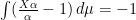

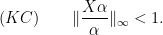

Proof of Lemma. It relies on $[U,X]=U$, which implies that $[U_\alpha,X]=\left(\frac{X\alpha}{\alpha}-1\right)U_\alpha$.

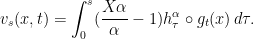

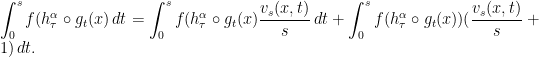

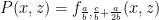

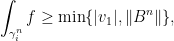

We see that we need to compute integrals over sheared arcs  . Let  be a smooth function. Then

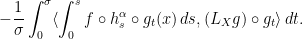

The second term is an ergodic integral which is easy to handle. The main term is the first term, which can be rewritten

where  ,  .

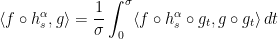

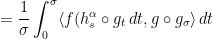

To deduce mixing, one must estimate  inner products  . We integrate by parts

The second term is again an ergodic integral, which tends to  .

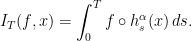

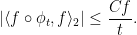

5.3. Quantitative equidistribution estimates

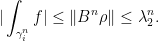

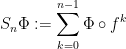

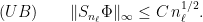

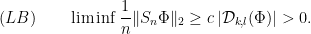

We are interested in ergodic integrals of the form

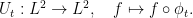

Flaminio-Forni treat the case without time-change, $\alpha=1$, and show that

for some  . One can adapt their arguments, using estimates by Bufetov-Forni, to the time-changed case, and get similar estimates.

5.4. Additional references

Marcus’s original technique already proved mixing. His setting was Anosov flows, with their stable and unstable foliations. From these, a flow  can be defined, which satisfies

where $s^*$ has a continuous mixed second partial derivative $\frac{\partial^2 s^*}{\partial t\,\partial s}$. So we see that time-changes were already in the picture.

Kushnirenko was able to prove mixing for smooth time-changes, assuming the following condition (KC):

Thus small time-changes are mixing. What about larger ones? This is still open.

Tiedra de Aldecoa uses a different method to prove absolute continuity of the spectrum for time changes satisfying (KC).

Generalizations. The setting is algebraic dynamics: a unipotent $1$-parameter subgroup acting on $G/\Gamma$, where $G$ is a semisimple Lie group.

Lucia Simonelli (Forni’s student) could prove absolute continuity of the spectrum for time changes satisfying (KC).

Davide Ravotti (my student) could prove quantitative mixing.

Kanigowski and Ravotti could prove quantitative  -mixing.

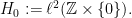

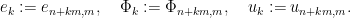

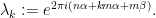

6. Heisenberg nilflows

Let $H$ denote the Lie group of unipotent upper triangular $3\times3$ matrices, with $X,Y,Z$ as standard generators of its Lie algebra, $Z=[X,Y]$. Let $\Gamma\subset H$ be a discrete cocompact lattice (for instance, unipotent matrices with integer entries). We call $M=\Gamma\backslash H$ the Heisenberg nilmanifold. $H$ acts on $M$ by right multiplication. The action of a $1$-parameter subgroup is called a nilflow.
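The proof of Lemma 9 below relies on the Campbell-Hausdorff-Dynkin formula. In the Heisenberg group this formula stops at the first bracket: $\exp A\exp B=\exp(A+B+\frac12[A,B])$. Here is a minimal exact check, assuming the standard realization $X=E_{12}$, $Y=E_{23}$, $Z=E_{13}$ by elementary matrices.

```python
from fractions import Fraction

def mat_mul(a, b):
    """Product of square matrices stored as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def lie(x, y, z):
    """xX + yY + zZ, with X = E12, Y = E23, Z = E13 (strictly upper triangular 3x3)."""
    return [[0, x, z], [0, 0, y], [0, 0, 0]]

def bracket(a, b):
    ab, ba = mat_mul(a, b), mat_mul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(3)] for i in range(3)]

def exp_nil(m):
    """exp(M) = I + M + M^2 / 2, exact because M^3 = 0 for strictly upper triangular 3x3 matrices."""
    m2 = mat_mul(m, m)
    return [[(1 if i == j else 0) + m[i][j] + Fraction(m2[i][j]) / 2 for j in range(3)] for i in range(3)]

X, Y, Z = lie(1, 0, 0), lie(0, 1, 0), lie(0, 0, 1)
print(bracket(X, Y) == Z, bracket(X, Z) == lie(0, 0, 0))  # True True: [X, Y] = Z and Z is central

# Campbell-Hausdorff-Dynkin truncates in step 2: exp(A) exp(B) = exp(A + B + [A, B] / 2).
x1, y1, z1 = Fraction(1, 3), Fraction(2), Fraction(-1, 5)
x2, y2, z2 = Fraction(3, 4), Fraction(-1, 2), Fraction(1, 7)
lhs = mat_mul(exp_nil(lie(x1, y1, z1)), exp_nil(lie(x2, y2, z2)))
rhs = exp_nil(lie(x1 + x2, y1 + y2, z1 + z2 + (x1 * y2 - y1 * x2) / 2))
print(lhs == rhs)  # True
```

Exact rational arithmetic via `fractions.Fraction` makes the identity an equality rather than a floating-point approximation.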

6.1. Classical results

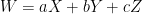

Auslander-Green-Hahn (1963) studied unique ergodicity of nilflows. They showed the following. Write the generator as $W=w_XX+w_YY+w_ZZ$. Then, for the nilflow defined by $W$,

$$\text{unique ergodicity}\iff\text{ergodicity}\iff\text{minimality}\iff w_X\text{ and }w_Y\text{ are rationally independent.}$$

Rational independence means that no linear relation $k\,w_X+l\,w_Y=0$ with integer coefficients $(k,l)\neq(0,0)$ can hold.

In other words, project the situation to the $2$-torus $\bar\Gamma\backslash\bar H$, where $\bar H=H/[H,H]$ and $\bar\Gamma=\Gamma[H,H]/[H,H]$. The flow $\phi_{\mathbb R}$ projects to a flow $\bar\phi_{\mathbb R}$ on $\bar\Gamma\backslash\bar H$, and

$$\text{unique ergodicity for }\phi_{\mathbb R}\iff\text{unique ergodicity for }\bar\phi_{\mathbb R}.$$

Here, we have used a theorem of Furstenberg on skew-products of rotations of the circle.

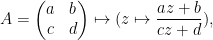

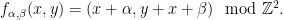

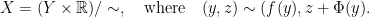

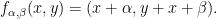

Definition 8 For real numbers $\alpha,\beta$, let $T_{\alpha,\beta}$ be the diffeomorphism of the $2$-torus defined by

$$T_{\alpha,\beta}(x,y)=(x+\alpha,\,y+x+\beta).$$

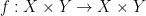

In general, a skew-product over a map $f:B\to B$ is a map $F:B\times Y\to B\times Y$ which is fiber-preserving (with respect to the projection $B\times Y\to B$) and such that the permutation of fibers is given by $f$. In the example at hand, $T_{\alpha,\beta}$ is isometric on fibers.
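Assuming the formula $T_{\alpha,\beta}(x,y)=(x+\alpha,y+x+\beta)$, iterating gives $T^n(x,y)=(x+n\alpha,\,y+nx+\binom n2\alpha+n\beta)$. Fibers $\{x\}\times\mathbb T$ are therefore moved isometrically. A horizontal arc, by contrast, is wrapped $n$ times around the fiber direction (it shears), and its image equidistributes in $y$. A short sketch with hypothetical parameters:

```python
import math

ALPHA, BETA = math.sqrt(2) - 1, 0.25  # hypothetical parameters

def skew(p, a=ALPHA, b=BETA):
    """Furstenberg skew-product T_{a,b}(x, y) = (x + a, y + x + b) on the 2-torus."""
    x, y = p
    return ((x + a) % 1.0, (y + x + b) % 1.0)

def skew_power(p, n, a=ALPHA, b=BETA):
    """Closed form T^n(x, y) = (x + n a, y + n x + n(n-1)/2 a + n b) mod 1."""
    x, y = p
    return ((x + n * a) % 1.0, (y + n * x + n * (n - 1) / 2 * a + n * b) % 1.0)

def arc_average(n, x0=0.2, eps=0.05, y0=0.0, m=4000):
    """Average of cos(2 pi y) over the image under T^n of the horizontal arc [x0, x0 + eps] x {y0}."""
    total = 0.0
    for i in range(m):
        x = x0 + eps * (i + 0.5) / m
        total += math.cos(2 * math.pi * skew_power((x, y0), n)[1])
    return total / m

print(arc_average(0), arc_average(1000))  # 1, then close to 0: the arc winds around the fiber
```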

6.2. First return map

Lemma 9 Assume that the Heisenberg nilflow $\phi_{\mathbb R}$ is uniquely ergodic. Then there is a transverse submanifold $S\subset M$, diffeomorphic to a torus, with the following property. The Poincaré return map $P:S\to S$, given by $P(p)=\phi_{r(p)}(p)$ with $r(p)$ the first return time to $S$, is one of the Furstenberg skew-products $T_{\alpha,\beta}$.

Proof of the Lemma. $S$ lifts to a vertical plane  in $H$. Since  and  commute, $S$ is diffeomorphic to a torus. If the nilflow is uniquely ergodic,  , so $S$ is transverse to the flow.

We show that  is a return time. We use the fact that  . So, using the Campbell-Hausdorff-Dynkin formula, we compute

This point belongs to $S$, so  .

6.3. Lack of mixing

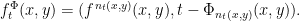

The above Lemma shows that we can now focus on Furstenberg skew-products. We shall see that the parameter $\beta$ plays no role, so we focus on $T_\alpha=T_{\alpha,0}$.

Definition 10 Given a map $T:Y\to Y$ and a function $r:Y\to(0,+\infty)$ (called the roof function), we define the special flow $T^r_{\mathbb R}$ over $T$ under $r$ as follows. It is a flow on a $Y$-bundle $Y^r$ over the circle, namely the quotient of the vertical unit speed flow on $Y\times\mathbb R$ under the identification

$$(y,s+r(y))\sim(T(y),s).$$

Fact. If a flow $\phi_{\mathbb R}$ admits a global Poincaré section $S$ with first return time $r$, then $\phi_{\mathbb R}$ is isomorphic to the special flow of the first return map with roof function $r$.

In the case at hand, the roof function is constant, say $r\equiv c$. Therefore, the special flow is not mixing. Take $A=B\times I$ with $B\subset Y$ and $I\subset[0,c)$ a short interval. At the times $t=nc$, $n\in\mathbb N$, its images by the vertical unit speed flow are $B\times(I+nc)$. Their projections to $Y^r$, which are the images of $A$ by the special flow, are $T^n(B)\times I$. So these images stay in the slab $Y\times I$ and never spread over $Y^r$.
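A minimal sketch of Definition 10 and of the argument above. It uses the Furstenberg skew-product $T_\alpha(x,y)=(x+\alpha,y+x)$ as base; the value of $\alpha$ and the non-constant roof are hypothetical. With a constant roof $c=1$, the height coordinate is unchanged at integer times, so a thin slab $Y\times I$ returns to itself and cannot spread out. With a non-constant roof, the heights start to depend on the point.

```python
import math

ALPHA = math.sqrt(2) - 1  # hypothetical rotation number

def T(p):
    """Furstenberg skew-product T_alpha(x, y) = (x + alpha, y + x) mod 1."""
    x, y = p
    return ((x + ALPHA) % 1.0, (y + x) % 1.0)

def special_flow(p, s, t, roof):
    """Flow for time t >= 0 on {(p, s): 0 <= s < roof(p)}: rise at unit speed, jump (p, roof(p)) -> (T(p), 0)."""
    s += t
    while s >= roof(p):
        s -= roof(p)
        p = T(p)
    return p, s

def constant_roof(p):
    return 1.0

def wavy_roof(p):
    """Hypothetical non-constant roof, bounded below by 0.5."""
    return 1.0 + 0.5 * math.sin(2 * math.pi * p[1])

starts = [((0.1, 0.2), 0.25), ((0.7, 0.9), 0.25)]
# Constant roof: at integer times every point keeps its height.
print([special_flow(p, s, 7.0, constant_roof)[1] for p, s in starts])  # [0.25, 0.25]
# Non-constant roof: after the same time, the heights depend on the point.
print([special_flow(p, s, 7.0, wavy_roof)[1] for p, s in starts])
```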

This shows that Heisenberg nilflows are never mixing.

7. Mixing time-changes

The following material can be found in Avila-Forni-Ulcigrai. A recent generalization to all step 2 nilflows can be found in Avila-Forni-Ravotti-Ulcigrai. Ravotti has treated filiform nilflows.

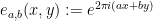

Let $\phi_{\mathbb R}$ be a Heisenberg nilflow and $\alpha$ a smooth positive function on $M$. Let

Then the time-change is given by

7.1. Time-changes versus special flows

We have seen that Heisenberg nilflows  are special flows with constant roof function. The time-change  is again a special flow, over the same skew-product, but with roof function

7.2. Trivial time-changes

Beware that there exist smooth time-changes which are trivial, i.e. smoothly conjugate to the original nilflow.

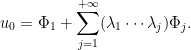

In general, adding a coboundary $u\circ T-u$ to the roof function of a special flow over $T$ produces an isomorphic flow. This leads us to the following problem: understand the cohomology of nilflows. Here are our latest results.
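The computation behind this fact is a telescoping identity. The Birkhoff sums of $r$ and of $r+u\circ T-u$ differ by $u(T^np)-u(p)$, which stays bounded, so the return times of the two special flows differ by a bounded, explicit amount. A sketch with hypothetical $\alpha$, $r$ and $u$, over the skew-product $T(x,y)=(x+\alpha,y+x)$:

```python
import math

ALPHA = math.sqrt(2) - 1  # hypothetical rotation number

def T(p):
    """Furstenberg skew-product T(x, y) = (x + alpha, y + x) mod 1."""
    x, y = p
    return ((x + ALPHA) % 1.0, (y + x) % 1.0)

def birkhoff_sum(f, p, n):
    """S_n f(p) = f(p) + f(Tp) + ... + f(T^{n-1} p)."""
    total = 0.0
    for _ in range(n):
        total += f(p)
        p = T(p)
    return total

def r(p):
    """Hypothetical roof function, bounded below by 1."""
    return 2.0 + math.cos(2 * math.pi * p[0]) * math.sin(2 * math.pi * p[1])

def u(p):
    """Hypothetical transfer function; |u o T - u| <= 0.6, so the new roof stays positive."""
    return 0.3 * math.sin(2 * math.pi * (p[0] + 2 * p[1]))

def r_cohomologous(p):
    """Roof r + u o T - u, cohomologous to r."""
    return r(p) + u(T(p)) - u(p)

p, n = (0.12, 0.34), 500
q = p
for _ in range(n):
    q = T(q)
# Telescoping: S_n(r + u o T - u) = S_n r + u(T^n p) - u(p), a bounded correction.
print(birkhoff_sum(r_cohomologous, p, n) - birkhoff_sum(r, p, n), u(q) - u(p))
```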

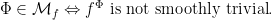

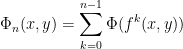

Theorem 11 Let  be a There exists a dense set  in  of roof functions, and a vector space  of countable dimension and codimension, such that if a roof function  is chosen in  , the corresponding special flow  is mixing.

Moreover, for  ,

In fact, Katok has found a nice characterization of which roof functions are smoothly trivial. I will come back to this next week. Today, I merely give one example.

Example.  is a smoothly trivial roof function.

Under the assumption that  has bounded type, Kanigowski and Forni have proved quantitative mixing.

7.3. Idea of proof

We start from a Furstenberg skew-product  and play with roof functions. We use mixing by shearing again. We consider intervals in the fiber (i.e. in the  direction). We shall see that many of them shear in the flow (  ) direction. But there are intervals which do not shear, or which shear in the other direction.

7.4. Special flow dynamics

Given a point  in the torus and

in the torus and  , we compute

, we compute  . When

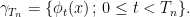

. When  is large, we join bottom to roof several times. Let

is large, we join bottom to roof several times. Let

and

Then

In order to exhibit shearing, we want to see how this changes in  . Since  is an isometry in the  -direction, the  -derivative of the sum  is the sum of  -derivatives, i.e.

Take  positive trigonometric polynomials on the torus.
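A quick check of the identity used above, under our own choices: $T(x,y)=(x+\alpha,y+x)$ and a hypothetical positive trigonometric polynomial $r$. Since $T^k$ moves $y$ by a translation, $\partial_y(r\circ T^k)=(\partial_yr)\circ T^k$. Hence the fiber derivative of a Birkhoff sum is the Birkhoff sum of the fiber derivative.

```python
import math

ALPHA = math.sqrt(2) - 1  # hypothetical rotation number

def T(p):
    """Furstenberg skew-product T(x, y) = (x + alpha, y + x) mod 1."""
    x, y = p
    return ((x + ALPHA) % 1.0, (y + x) % 1.0)

def r(p):
    """Hypothetical positive trigonometric polynomial roof (bounded below by 0.5)."""
    x, y = p
    return 2.0 + math.cos(2 * math.pi * y) + 0.5 * math.sin(2 * math.pi * (x + y))

def dr_dy(p):
    """Exact y-derivative of r."""
    x, y = p
    return -2 * math.pi * math.sin(2 * math.pi * y) + math.pi * math.cos(2 * math.pi * (x + y))

def birkhoff_sum(f, p, n):
    """S_n f(p) = f(p) + f(Tp) + ... + f(T^{n-1} p)."""
    total = 0.0
    for _ in range(n):
        total += f(p)
        p = T(p)
    return total

p, n, h = (0.31, 0.57), 200, 1e-5
finite_diff = (birkhoff_sum(r, (p[0], p[1] + h), n) - birkhoff_sum(r, (p[0], p[1] - h), n)) / (2 * h)
print(finite_diff, birkhoff_sum(dr_dy, p, n))  # agree: d/dy S_n r = S_n (d/dy r)
```

By contrast, an $x$-derivative would pick up factors $k$ from the term $kx$ in $T^k$. This is the shearing in the base direction.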

Given  , let

, let  minus its average on the

minus its average on the  -fiber. Define

-fiber. Define  as the set of

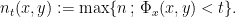

as the set of  such that

such that  is not a measurable coboundary.

is not a measurable coboundary.

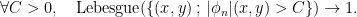

Step 1. Since  is not a measurable coboundary, the sums  must grow,

This relies on a result by Gottschalk-Hedlund, plus decoupling.

Step 2. The sums  are trigonometric polynomials of bounded degree. It follows that

There can be flat intervals where no stretching occurs, so one must throw them away. But the larger  , the shorter these intervals are.

Step 3. We use a polynomial bound on level sets of trigonometric polynomials.

The next class will deal with renormalization and deviations of ergodic averages. Only later shall we get back to Katok’s characterization of smoothly trivial special flows and to nilflows.

8. Short recap

Parabolicity is (a bit heuristically) defined by slow butterfly effect. This can be formalized for smooth flows, in terms of growth of derivatives under iteration. It is not that easy to build examples.

At present, the parabolic flows we know form the following list of examples:

- Horocycle flows of compact constant curvature surfaces.

- Unipotent flows (a generalization of the above).

- Nilflows and their time-changes.

- Smooth area-preserving flows.

- Linear flows on flat surfaces with conical singularities.

The first two are uniformly parabolic. The third is partially parabolic. The fourth is nonuniformly parabolic, and the last is elliptic with singularities.

We have proven mixing via the technique of shearing.

Here is a further example where this technique works, due to B. Fayad, of an elliptic flavour. Start with a linear flow on the $3$-torus. If  , Fayad has been able to construct analytic time-changes which are mixing.

Such examples are very rare: they rely on the parameters being very Liouville numbers, i.e. extremely well approximated by rationals.

9. Renormalization

The word here is taken in a meaning which differs from its use in holomorphic dynamics (not to speak of quantum mechanics).

The idea is to analyze systems which are approximately self-similar, and exhibit several time scales.

We introduce the renormalization flow  which rescales: long trajectories become short. Given a map $T:X\to X$, one way to zoom in is to restrict $T$ to a subspace $Y\subset X$ and replace $T$ with the first return map to $Y$, possibly rescaling space afterwards. But there are other means.
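Inducing is easy to experiment with on a circle rotation. This is our illustrative choice, with a golden-mean rotation number. The first return map to an interval $[0,L)$ is again an exchange of at most three intervals. By a classical theorem (Slater's three-gap theorem for return times), the return times take at most three values, and when there are three, the largest is the sum of the other two.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # illustrative irrational rotation number

def first_return(x, length, alpha=ALPHA, max_steps=10_000):
    """First n >= 1 with R^n(x) in [0, length), where R(x) = x + alpha mod 1; returns (n, R^n(x))."""
    y = x
    for n in range(1, max_steps + 1):
        y = (y + alpha) % 1.0
        if y < length:
            return n, y
    raise RuntimeError("no return found")

def return_times(length, samples=2000):
    """Distinct first-return times to [0, length), over a grid of starting points."""
    times = set()
    for i in range(samples):
        x = length * (i + 0.5) / samples
        times.add(first_return(x, length)[0])
    return sorted(times)

for length in (0.3, 0.1, 0.037):
    print(length, return_times(length))  # at most three values, e.g. [2, 3, 5] for length 0.3
```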

9.1. A series of examples

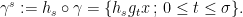

Example. Start with the horocycle flow $h_{\mathbb R}$. The key relation is

Applying the geodesic flow to a length  trajectory  , we get a trajectory of length  . So the geodesic flow achieves renormalization, on the same space, with no effort.

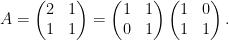

Example. The cat map $A$ associated with the matrix $\begin{pmatrix}2&1\\1&1\end{pmatrix}$ on the $2$-torus. Let $\lambda>1$ and $\lambda^{-1}$ denote the eigenvalues,  the eigenvectors. Let  be the linear flow in direction  , with unit speed. This is an elliptic flow.

Put  . Let

Put  . Then $A$ maps  to a trajectory of  of length  .

Express $A$ as the composition of two Dehn twists,

$$\begin{pmatrix}2&1\\1&1\end{pmatrix}=\begin{pmatrix}1&1\\0&1\end{pmatrix}\begin{pmatrix}1&0\\1&1\end{pmatrix}.$$

Rotate coordinates so that the eigenvector  becomes vertical. Then $A$ acts by a diagonal matrix in $\mathrm{SL}(2,\mathbb R)$, which was denoted by  ,  earlier.
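A short sketch for this example, assuming the cat map is given by $\begin{pmatrix}2&1\\1&1\end{pmatrix}$. It checks the Dehn twist factorization exactly. It also checks that a segment of length $T$ along the unstable eigenvector, i.e. a piece of trajectory of the linear flow in that direction, is mapped to a segment of length $\lambda T$ along the same direction.

```python
import math

def mul(a, b):
    """Product of 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)) for i in range(2))

def apply(m, v):
    """Matrix-vector product for a 2x2 matrix."""
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])

A = ((2, 1), (1, 1))
TWIST_H = ((1, 1), (0, 1))  # Dehn twist along the horizontal curve: (x, y) -> (x + y, y)
TWIST_V = ((1, 0), (1, 1))  # Dehn twist along the vertical curve: (x, y) -> (x, x + y)
print(mul(TWIST_H, TWIST_V) == A)  # True

LAM = (3 + math.sqrt(5)) / 2  # expanding eigenvalue
V_U = (1.0, LAM - 2)          # eigenvector: A V_U = LAM V_U
norm = math.hypot(*V_U)
unit = (V_U[0] / norm, V_U[1] / norm)

seg = 0.01  # length of a piece of linear-flow trajectory in the unstable direction
image = apply(A, (seg * unit[0], seg * unit[1]))
print(math.hypot(*image) / seg, LAM)  # equal up to rounding: lengths are multiplied by LAM
```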

Example. Higher genus toy model.

View a genus $2$ surface  as a regular octagon in the Euclidean plane with edge identifications. Fix a direction  and consider the linear flow in direction  . It descends to a flow  on the surface which is not well-defined at the vertex.

This generalizes to translation surfaces, made of a disjoint union of polygons, with identifications given by translations. The notion of a direction  is well-defined on the surface (except at finitely many singularities), whence a flow  with singularities.

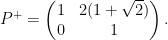

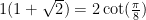

Consider the matrix

$$P=\begin{pmatrix}1&2\cot(\pi/8)\\0&1\end{pmatrix}.$$

It applies a shear on the original regular octagon. Since $P$ acts on each of the two horizontal cylinders of the octagon as a power of a Dehn twist, this linear map induces a homeomorphism of the surface $S$. Let us repeat in a different direction (rotation by 45 degrees), and get a matrix $P'$. Let $A$ be a product of $P$ and $P'$ (or of their inverses); this is a hyperbolic matrix, with eigenvalues $\lambda^{\pm1}$, $\lambda>1$. We get again an affine automorphism $\psi$ of the surface. Let $\phi_t$ denote the linear flow in the direction of the expanding eigenvector $v_u$ of $A$. Then, as for the cat map,

$$\psi\circ\phi_t=\phi_{\lambda t}\circ\psi .$$

B. Veech has shown that the affine group of the surface is generated by $P$ and the order $8$ rotation. This is as large as the affine group of a translation surface can be: its group of derivatives is a lattice in $SL(2,\mathbb R)$. Therefore, $S$ is called a Veech surface.
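To see that such products are indeed hyperbolic, here is a small numerical sketch. It assumes that the horizontal shear $P$ has entry $2\cot(\pi/8)=2+2\sqrt2$, the standard parabolic element for the regular octagon. It conjugates $P$ by the rotation of angle $\pi/4$ to get $P'$ and computes traces, using the fact that a matrix in $SL(2,\mathbb R)$ is hyperbolic exactly when $|\mathrm{tr}|>2$. The sketch tests two natural products, $PP'$ and $PP'^{-1}$. The helper names are ours.

```python
import math


def matmul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
                 for i in range(2))


def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))


def shear(x):
    return ((1.0, x), (0.0, 1.0))


def trace(m):
    return m[0][0] + m[1][1]


def det(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def sl2_eigenvalues(m):
    """Real eigenvalues (lam, 1/lam) of a hyperbolic determinant-1 matrix, else None."""
    tr = trace(m)
    if abs(tr) <= 2:
        return None
    root = math.sqrt(tr * tr - 4)
    return ((tr + root) / 2, (tr - root) / 2)


SHEAR = 2 / math.tan(math.pi / 8)                 # assumed entry, equal to 2 + 2*sqrt(2)
P = shear(SHEAR)
R, R_INV = rotation(math.pi / 4), rotation(-math.pi / 4)
P_ROT = matmul(matmul(R, P), R_INV)               # same shear along the 45-degree direction
P_ROT_INV = matmul(matmul(R, shear(-SHEAR)), R_INV)

A1 = matmul(P, P_ROT)        # trace 2 - SHEAR**2 / 2 = -4 - 4*sqrt(2)
A2 = matmul(P, P_ROT_INV)    # trace 2 + SHEAR**2 / 2 =  8 + 4*sqrt(2)
```

Both traces have absolute value well above $2$, so both products are hyperbolic. Their expanding eigenvectors give directions that are renormalized by the corresponding affine automorphisms.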

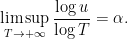

For almost every direction $\theta$ (under an explicit condition on $\theta$), there exists a sequence of hyperbolic affine automorphisms $\psi_k$ of $S$ whose unstable directions $\theta_k$ converge to $\theta$. The $\psi_k$ can be used to renormalize $\phi^\theta_t$.

Example.

For a slightly deformed octagon $O'$, the affine group of the corresponding surface $S'$ is trivial. Nevertheless, almost every linear flow $\phi^\theta_t$ on $S'$ is still renormalizable: there is a sequence of surfaces $S_k$ and affine hyperbolic morphisms $\psi_k$ with expanding directions $\theta_k$ converging to $\theta$.

Indeed, consider the space $\mathcal M_g$ of linear flows on translation surfaces of genus $g$. The group of diagonal matrices $\mathrm{diag}(e^t,e^{-t})$ acts on this space, generating a flow $g_t$ on $\mathcal M_g$, known as the Teichmüller flow.

Theorem 12 (Masur-Veech) The Teichmüller flow $g_t$ is recurrent.

Therefore, for almost every linear flow $x\in\mathcal M_g$, there exists a sequence $t_k\to\infty$ such that $g_{t_k}x$ tends to $x$.

9.2. What is renormalization good for?

It is used to put Diophantine conditions on linear flows in higher genus. See my ICM 2022 talk (watch it online on July 11th). I do not pursue this topic further.

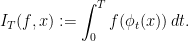

It is used to study deviations of ergodic averages. Assume a flow $\phi_t$ is uniquely ergodic, with invariant probability measure $\mu$. Ergodic integrals take the form

$$\int_0^Tf(\phi_tx)\,dt.$$

By the ergodic theorem (and unique ergodicity), $\frac1T\int_0^Tf(\phi_tx)\,dt\to\int f\,d\mu$ for every $x$ and every continuous $f$. Let us focus on our favourite example, the Veech surface. The invariant measure is area in the plane. Unique ergodicity is a theorem of Masur and of Kerckhoff-Masur-Smillie.

We show that for functions $f$ with vanishing average,

$$\int_0^Tf(\phi_tx)\,dt=O(T^{\nu})$$

for some $\nu<1$ (discovered by Zorich, conjectured by Kontsevich-Zorich, proven by Forni).

Fix a basis $(\gamma_i)$ of $H_1(S,\mathbb Z)$. Fix a section $\Sigma$ of the linear flow. Take trajectories starting from $\Sigma$ and stop them at their first return to $\Sigma$. Closing them up by segments of $\Sigma$ gives representatives of the basis of homology. For such a representative of a class $\gamma$, let $I(\gamma)$ denote the integral of $f$ along its trajectory part.

Let $B$ be the matrix expressing the homology basis $(\psi_*\gamma_i)$ in terms of the initial basis $(\gamma_i)$, i.e. $\psi_*\gamma_i=\sum_jB_{ij}\gamma_j$. Let $\mu_1>|\mu_2|\ge\cdots$ denote the eigenvalues of $B$. By continuity of $f$, up to a small error, $I$ is additive, so that

$$I(\psi_*\gamma_i)\approx(Bv)_i,\qquad v=\big(I(\gamma_1),\dots,I(\gamma_{2g})\big).$$

Let us start from a large power $\psi^n$ instead of $\psi$:

$$I(\psi^n_*\gamma_i)\approx(B^nv)_i.$$

For simplicity, let us assume that $v$ is an eigenvector for $\mu_1$. Then $I(\psi^n_*\gamma_i)\approx\mu_1^nv_i$, whereas the length of the trajectory involved also grows like $\mu_1^n$, so the ergodic averages would not tend to $\int f\,d\mu=0$: a contradiction. Therefore $v$ must be a combination of the eigenvectors for $\mu_2,\mu_3,\dots$. Thus

$$|I(\psi^n_*\gamma_i)|=O(|\mu_2|^n).$$

On the other hand, the length $T_n$ of the trajectory grows like $\mu_1^n$. Let $\nu$ satisfy

$$|\mu_2|=\mu_1^{\nu},\qquad\text{i.e.}\qquad\nu=\frac{\log|\mu_2|}{\log\mu_1}<1.$$

Then $I(\psi^n_*\gamma_i)=O(T_n^{\nu})$, as announced.

Of course, I cheated a bit: some more regularity of $f$ is needed.

In that example, the eigenvalues $\mu_i$, hence $\nu$, can be computed explicitly. Tomorrow, I will mention results on this for general translation surfaces.

And then, back to nilflows.

9.3. Area preserving flows on surfaces

Let $S$ be a compact connected oriented surface of genus $g\ge2$. Let $\phi_t$ be a flow which preserves a smooth measure $\mu$. Note that $\phi_t$ has fixed points (by the Poincaré-Hopf theorem, since the Euler characteristic of $S$ is nonzero), which we assume are all of saddle type. In the next theorem, the number and types of fixed points are fixed.

Theorem 13 (Zorich, Forni, Avila-Viana) There exist $g$ distinct positive exponents $1=\nu_1>\nu_2>\cdots>\nu_g>0$ such that almost every choice of $\phi_t$ (with respect to Katok's fundamental class, described in terms of periods) is uniquely ergodic, and for all smooth functions $f$,

$$\int_0^Tf(\phi_tx)\,dt=\sum_{i=1}^{g}c_i(T,x)\,D_i(f)\,T^{\nu_i}+\mathrm{err}(f,T,x),$$

with an error term of lower order, for all $x\in S$.

In this statement, $c_i(T,x)$ means a function such that

$$\limsup_{T\to\infty}\frac{\log|c_i(T,x)|}{\log T}=0.$$

Furthermore, $D_i$ is a distribution on $S$, and $D_1(f)=\int_Sf\,d\mu$.

This type of behavior is called a power deviation spectrum. It was conjectured by Kontsevich and Zorich, then proved by Zorich in 1997 for special functions $f$, with only one term. Then Forni obtained a proof in 2002 for functions with support away from fixed points, up to distinctness of the exponents, which was proven by Avila-Viana. Bufetov gave a more precise version, transforming the result into an asymptotic expansion. With Fraczek, we gave a different proof based on Marmi-Moussa-Yoccoz. Finally, Fraczek-Kim could handle generic saddles. The expansion then involves extra terms depending on the saddles.

9.4. Idea of proof

Choose coordinates such that $\phi_t$ appears as a time-change of a linear flow on a translation surface. The time-change is smooth only away from fixed points. If the function $f$ has support away from fixed points, one is reduced to studying deviations for linear flows. For a general linear flow, a renormalization is given by a sequence of matrices $B_k$, the Kontsevich-Zorich cocycle. The ratios $\log|\mu_i|/\log\mu_1$ of eigenvalues are replaced with ratios $\lambda_i/\lambda_1$ of Lyapunov exponents.

10. More on renormalization

10.1. Back to nilflows

Let $\Gamma$ denote the stabilizer of the vector $v$, and define a flow $g_t$ on the corresponding quotient by the action of diagonal matrices, as for the Teichmüller flow.

Then $g_t$ is recurrent; it has been used as a renormalization by Flaminio-Forni, in order to prove polynomial deviations of ergodic averages.

10.2. Renormalizable parabolic flows

Horocycle flows: yes.

Unipotent flows: unknown.

Heisenberg nilflows: yes.

Higher step nilflows: unknown in general. A special case (special flows over skew products) has been studied by Flaminio-Forni. In that case, the renormalization dynamics diverges.

Linear flows over higher genus surfaces (they are smooth and area-preserving).

More general smooth flows on surfaces (not necessarily area-preserving): then the renormalization dynamics typically diverges. This is related to generalized interval exchange transformations.

11. Isomorphisms between time-changes

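Recall that a time-change $\tilde\phi_t$ of a flow $\phi_t$, generated by a vector field $X$, is the flow generated by the vector field

$$\tilde X=\alpha X,$$

where $\alpha$ is a smooth positive function. When does such a change lead to a genuinely different, nonisomorphic flow?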

11.1. Setting of special flows

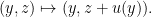

Let $f:Y\rightarrow Y$ be a map. Given a roof function $\Phi:Y\rightarrow\mathbb R_{>0}$, the flow of translations on $Y\times\mathbb R$ descends to a flow $\psi^{f,\Phi}_{\mathbb R}$ on the quotient space

$$Y\times\mathbb R\big/\big((y,s+\Phi(y))\sim(f(y),s)\big).$$

Lemma 14 Let $\psi^{f,\Phi_1}_{\mathbb R}$ and $\psi^{f,\Phi_2}_{\mathbb R}$ be special flows over the same map $f$, under roofs $\Phi_1$ and $\Phi_2$. If there exists a continuous function $h:Y\rightarrow\mathbb R$ such that

$$\Phi_1-\Phi_2=h\circ f-h,$$

then the two special flows are isomorphic.

Indeed, look for a conjugating homeomorphism of the form

$$H(y,s)=(y,s+h(y)).$$

It commutes with translations and maps one equivalence relation to the other: since $\Phi_1+h=\Phi_2+h\circ f$, the points $H(y,s+\Phi_1(y))=(y,s+h(y)+\Phi_1(y))$ and $H(f(y),s)=(f(y),s+h(f(y)))$ are equivalent for the relation defined by $\Phi_2$.
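The lemma can be tested numerically on a toy example. This sketch is our own: $f$ is a circle rotation, and the roof $\Phi_1$ and the transfer function $h$ are hypothetical choices, with $\Phi_2:=\Phi_1-(h\circ f-h)$ kept positive. Special flows are implemented by reducing points to the fundamental domain $0\le s<\Phi(y)$. The code then checks that $H\circ\psi^{\Phi_1}_t=\psi^{\Phi_2}_t\circ H$.

```python
import math

ALPHA = math.sqrt(2) - 1  # hypothetical irrational rotation number


def f(y):
    return (y + ALPHA) % 1.0


def f_inv(y):
    return (y - ALPHA) % 1.0


def h(y):
    """Hypothetical continuous transfer function."""
    return 0.3 * math.sin(2 * math.pi * y)


def roof1(y):
    """Hypothetical positive roof Phi_1."""
    return 2.0 + math.cos(2 * math.pi * y)


def roof2(y):
    """Phi_2 = Phi_1 - (h∘f - h); it stays >= 0.4 for these choices."""
    return roof1(y) - (h(f(y)) - h(y))


def normalize(y, s, roof):
    """Representative with 0 <= s < roof(y), using (y, s + roof(y)) ~ (f(y), s)."""
    while s >= roof(y):
        s -= roof(y)
        y = f(y)
    while s < 0:
        y = f_inv(y)
        s += roof(y)
    return y, s


def special_flow(point, t, roof):
    """Time-t map of the special flow under `roof`."""
    y, s = point
    return normalize(y, s + t, roof)


def conjugacy(point):
    """H(y, s) = (y, s + h(y)), read in the fundamental domain of roof2."""
    y, s = point
    return normalize(y, s + h(y), roof2)


def same_point(p, q, tol=1e-9):
    dy = abs(p[0] - q[0]) % 1.0
    return min(dy, 1.0 - dy) < tol and abs(p[1] - q[1]) < tol
```

Both sides of the identity are representatives of the same equivalence class in the fundamental domain of $\Phi_2$, so they coincide up to rounding.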

11.2. Cohomological equations

The operator $h\mapsto h\circ f-h$ is called the coboundary operator. Hence the equation $h\circ f-h=\Phi$ with unknown $h$ is called a cohomological equation. It sometimes appears with a twist: $h\circ f-\lambda h=\Phi$, for some constant $\lambda$.

There are obvious obstructions.

If $y$ is a periodic point, i.e. $f^p(y)=y$, then

$$\sum_{k=0}^{p-1}\Phi(f^k(y))=h(f^p(y))-h(y)=0.$$

So every periodic orbit gives an obstruction. If $f$ is hyperbolic, it is essentially the only one (Livšic theorem).

More generally, if $\mu$ is an $f$-invariant measure, then

$$\int\Phi\,d\mu=\int(h\circ f-h)\,d\mu=0.$$

So every invariant measure gives an obstruction.

11.3. An elliptic example

If  is elliptic, this is sometimes sufficient, e.g. for circle rotations

is elliptic, this is sometimes sufficient, e.g. for circle rotations  : if

: if  is a trigonometric polynomial and

is a trigonometric polynomial and  , then there exists a solution

, then there exists a solution  . If

. If  is smooth, a Diophantine condition on

is smooth, a Diophantine condition on  is required in addition for the solution

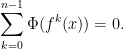

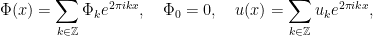

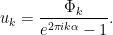

is required in addition for the solution  to be smooth. Indeed, in Fourier, if

to be smooth. Indeed, in Fourier, if

then

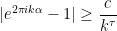

For  to decay superpolynomially, one needs that

to decay superpolynomially, one needs that

for some  , which amounts to

, which amounts to  being badly approximable by rationals.

being badly approximable by rationals.
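Here is a minimal Python sketch of this Fourier computation. A trigonometric polynomial $\Phi$ is encoded as a dict $n\mapsto\Phi_n$, which is our own choice. The code checks the mean-zero obstruction, solves $h(x+\alpha)-h(x)=\Phi(x)$ coefficient by coefficient, and evaluates the small divisors $|e^{2\pi in\alpha}-1|$. The sample value of $\alpha$ (the golden mean, which is badly approximable) is also ours.

```python
import cmath
import math


def evaluate(coeffs, x):
    """Trigonometric polynomial sum_n c_n e^{2 pi i n x}, coefficients given as a dict n -> c_n."""
    return sum(c * cmath.exp(2j * math.pi * n * x) for n, c in coeffs.items())


def small_divisor(n, alpha):
    """|e^{2 pi i n alpha} - 1| = 2 |sin(pi n alpha)|."""
    return abs(cmath.exp(2j * math.pi * n * alpha) - 1)


def solve_rotation(phi, alpha, tol=1e-12):
    """Coefficients of h with h(x + alpha) - h(x) = phi(x), for a trigonometric polynomial phi.

    The constant term h_0 is free; it is set to 0 here.
    """
    if abs(phi.get(0, 0)) > tol:
        raise ValueError("obstruction: phi must have zero average")
    h = {}
    for n, c in phi.items():
        if n == 0:
            continue
        d = cmath.exp(2j * math.pi * n * alpha) - 1
        if abs(d) < tol:
            raise ValueError("resonance: n * alpha is an integer")
        h[n] = c / d
    return h
```

For smooth $\Phi$ with infinitely many nonzero coefficients, the same formula $h_n=\Phi_n/(e^{2\pi in\alpha}-1)$ applies. Whether $h$ is smooth then depends only on how fast `small_divisor(n, alpha)` can become small.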

11.4. Parabolic case

In the parabolic world, in addition to invariant measures, invariant distributions provide further obstructions.

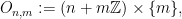

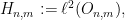

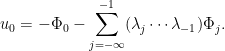

Remember that Heisenberg nilflows are special flows with constant roof over Furstenberg skew-products of the form

We need study the corresponding cohomological equation.

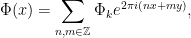

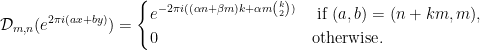

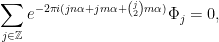

Proposition 15 In Fourier series, if

then the cohomological equation has a formal solution if and only if all

then the cohomological equation has a formal solution if and only if all  where

where  is the distribution such that

is the distribution such that

Indeed, let  , and

, and

Then

The matrix  acts on

acts on  . It has a family of orbits

. It has a family of orbits  and

and  orbits

orbits

, for each

, for each  .

.  splits accordingly, so the cohomological equation can be solved independently in each summand

splits accordingly, so the cohomological equation can be solved independently in each summand

and on

For  ,

,  acts like the circle rotation

acts like the circle rotation  , so we already understand the necessary condition, given by invariant measures.

, so we already understand the necessary condition, given by invariant measures.

For fixed  and

and  , let us denote

, let us denote

The cohomological equation reads

where

Recursively, one gets

Then

Similarly, the equation

yields

Combining both leads to

i.e.  .

.

Conversely, one can see that this conditions are sufficient for existence of a formal solution, and for existence of smooth solutions under Diophantine conditions.
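The recursion can be run numerically on a single orbit. The following Python sketch is our own illustration. It uses a hypothetical value of $\alpha$ and finitely supported data $k\mapsto\Phi_k$ on the orbit of $(m,n)$, with $T(x,y)=(x+\alpha,y+x)$ and $\Lambda_k=e^{2\pi i\alpha(km+nk(k-1)/2)}$. The code computes $u\circ T-u$ along the orbit and evaluates $D_{m,n}(\Phi)=\sum_k\overline{\Lambda_k}\Phi_k$. When this vanishes, it rebuilds the solution from $u_k=\Lambda_k\sum_{l>k}\overline{\Lambda_l}\Phi_l$.

```python
import cmath
import math

ALPHA = (math.sqrt(5) - 1) / 2  # hypothetical irrational alpha


def lam(k, m, n, alpha=ALPHA):
    """lambda_k = exp(2 pi i (m + k n) alpha): phase of e_{m+kn,n} under composition with T."""
    return cmath.exp(2j * math.pi * (m + k * n) * alpha)


def big_lam(k, m, n, alpha=ALPHA):
    """Lambda_k = exp(2 pi i alpha (k m + n k (k - 1) / 2)), so Lambda_k = lambda_{k-1} Lambda_{k-1}."""
    return cmath.exp(2j * math.pi * alpha * (k * m + n * k * (k - 1) / 2))


def coboundary_on_orbit(u, m, n, alpha=ALPHA):
    """Coefficients k -> Phi_k of u∘T - u on the orbit of (m, n), for finitely supported u: k -> u_k."""
    phi = {}
    for k, uk in u.items():
        # e_{m+kn,n} ∘ T = lambda_k e_{m+(k+1)n,n}
        phi[k + 1] = phi.get(k + 1, 0) + lam(k, m, n, alpha) * uk
        phi[k] = phi.get(k, 0) - uk
    return phi


def invariant_distribution(phi, m, n, alpha=ALPHA):
    """D_{m,n}(Phi) = sum_k conj(Lambda_k) Phi_k."""
    return sum(big_lam(k, m, n, alpha).conjugate() * c for k, c in phi.items())


def solve_on_orbit(phi, m, n, alpha=ALPHA, tol=1e-9):
    """Finitely supported solution u_k = Lambda_k * sum_{l > k} conj(Lambda_l) Phi_l."""
    if abs(invariant_distribution(phi, m, n, alpha)) > tol:
        raise ValueError("obstruction: D_{m,n}(Phi) != 0")
    u = {}
    for k in range(min(phi), max(phi)):
        tail = sum(big_lam(l, m, n, alpha).conjugate() * c for l, c in phi.items() if l > k)
        u[k] = big_lam(k, m, n, alpha) * tail
    return u
```

On an infinite orbit, a finitely supported solution is unique, so `solve_on_orbit` recovers exactly the $u$ used to build a coboundary. A single nonzero coefficient, by contrast, is never a coboundary.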

11.5. More general results on cohomological equations in parabolic dynamics

- The case of Heisenberg nilflows, which we just treated, is due to Katok.

- More general nilflows have been studied by Flaminio-Forni, as well as horocycle flows.

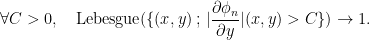

- Linear flows on higher genus surfaces are due to Forni. In this case, one gets a family of invariant distributions $D_i$. If a smooth function $f$ is killed by all of them, ergodic integrals stay bounded. This is equivalent to $f$ being a coboundary, according to Gottschalk-Hedlund.

11.6. Cocycle effectiveness

Sometimes, the cohomological equation with a measurable solution is needed. It is much more difficult, but a miracle occurs in the parabolic setting.

Definition 16 Given a map $T:X\rightarrow X$, say that a function $\Phi$ on $X$ is a measurable coboundary if there exists a measurable function $u$ such that $\Phi=u\circ T-u$.

The theorem on mixing smooth time-changes of Heisenberg nilflows (Avila-Forni-Ulcigrai) required the roof not to be a measurable coboundary. It turns out that here, this is equivalent to not being a smooth coboundary.

Proposition 17 Let us study the Furstenberg skew-product $T(x,y)=(x+\alpha,y+x)$ on the $2$-torus. Let $\Phi$ be a smooth function on $\mathbb T^2$. Then

$$\Phi\text{ is not a smooth coboundary}\iff\Phi\text{ is not a measurable coboundary.}$$

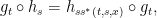

Indeed, let

$$S_N\Phi=\sum_{k=0}^{N-1}\Phi\circ T^k$$

denote the Birkhoff sums. Then Flaminio-Forni establish quadratic upper bounds on $S_{N_k}\Phi$ along a suitable sequence $N_k\to\infty$.

Matching lower bounds exist: if $\Phi$ is not a smooth coboundary, there exists an invariant distribution $D$ with $D(\Phi)\neq0$, and then $S_{N_k}\Phi$ satisfies a matching quadratic lower bound.

If $\Phi$ is a measurable coboundary, $\Phi=u\circ T-u$, then

$$S_N\Phi=u\circ T^N-u$$

stays bounded on a set of almost full measure, and grows at most quadratically on the complement. This contradicts the quadratic lower bound.

11.7. More general nilflows

Theorem 18 (Avila-Forni-Ravotti-Ulcigrai) For general nilflows of step $k\ge2$, there exists a dense (in $C^\infty$) class $\mathcal P$ of generators $\alpha$ of time-changes, which are

- either measurably trivial (i.e. measurably conjugate to the nilflow);

- or mixing.

Unfortunately, the set $\mathcal P$ is not explicitly describable, as it is in the Heisenberg case.

The proof is an induction on central extensions. It uses mixing by shearing.

12. More examples of parabolic dynamics

12.1. Parabolic perturbations which are not time-changes

These were discovered by Ravotti during his PhD at Princeton.

Here, parabolic means that the derivative of the flow grows polynomially. It implies that smooth time-changes are still parabolic.

Start with $G=SL(3,\mathbb R)$, $\Gamma$ a cocompact lattice, $M=\Gamma\backslash G$. Let $\phi^U_t$ be the flow generated by a unipotent element $U$. Let $Z$ belong to the center of the minimal unipotent subgroup, and $V$ be such that $[U,V]=-cZ$.

Let $X=U+\beta Z$, where $\beta$ is a function on $M$ satisfying a suitable condition. Let $\phi_t$ denote the corresponding flow.

Theorem 19 If $\phi_t$ preserves a measure $\mu$ with smooth density, then $\phi_t$ is

- parabolic: $\|D\phi_t\|$ grows polynomially in $t$;

- ergodic;

- mixing.

Remark. Existence of $\mu$ is equivalent to the existence of a time-change of the $Z$-flow which commutes with $\phi_t$.

Remark. There exist such flows $\phi_t$ which are not smoothly isomorphic to $\phi^U_t$. This follows from the failure of cocycle rigidity for parabolic actions, due to Wang, following work of many people.

Remark. Ergodicity needs to be proven; it does not follow from general principles.

The proof relies on mixing by shearing, although in a setting different from what we have already met. Consider arcs of orbits of $V$ and push them by $\phi_t$. Since $[U,V]=-cZ$, the pushed arcs get sheared along the $Z$ direction, by an amount involving an ergodic integral for $\phi_t$.

12.2. What else can shearing be used for?

A strong, quantitative shearing can be used to establish spectral results. Here, I mean the spectrum of the Koopman operator

$$\mathcal U_t:f\mapsto f\circ\phi_t\qquad\text{on }L^2(M,\mu).$$

To each $f\in L^2(M,\mu)$, there corresponds a spectral measure $\sigma_f$ on $\mathbb R$. Its Fourier coefficients are given by self-correlations of $f$, i.e.

$$\hat\sigma_f(t)=\int_{\mathbb R}e^{it\xi}\,d\sigma_f(\xi)=\langle f\circ\phi_t,f\rangle.$$

The spectrum of $\phi_t$ (on the space $L^2_0$ of functions with zero average) is absolutely continuous $\iff$ $\sigma_f$ is absolutely continuous for all $f\in L^2_0$ $\iff$ $\sigma_f$ is absolutely continuous for all $f$ in a dense subset of $L^2_0$.

If $\phi_t$ is ergodic, it is enough to study functions $f$ which are smooth coboundaries, since they are dense in $L^2_0$.
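For contrast, here is the simplest elliptic case, as a small Python sketch with an example of our choosing. Take the translation flow $\phi_t(x)=x+t$ on the circle and a trigonometric polynomial $f=\sum_nc_ne^{2\pi inx}$. Then the spectral measure is the atomic measure $\sigma_f=\sum_n|c_n|^2\delta_{2\pi n}$. The code checks that its Fourier transform coincides with the self-correlations $\langle f\circ\phi_t,f\rangle$. These correlations are periodic in $t$ and never decay. Absolutely continuous spectrum, by contrast, forces them to tend to $0$ (Riemann-Lebesgue lemma).

```python
import cmath
import math


def evaluate(coeffs, x):
    """Trigonometric polynomial sum_n c_n e^{2 pi i n x}."""
    return sum(c * cmath.exp(2j * math.pi * n * x) for n, c in coeffs.items())


def correlation(coeffs, t, samples=256):
    """<f∘phi_t, f> = ∫_0^1 f(x + t) conj(f(x)) dx for phi_t(x) = x + t on the circle.

    The Riemann sum is exact for trigonometric polynomials of degree < samples / 2.
    """
    return sum(evaluate(coeffs, j / samples + t) * evaluate(coeffs, j / samples).conjugate()
               for j in range(samples)) / samples


def spectral_fourier_transform(coeffs, t):
    """Fourier transform at t of sigma_f = sum_n |c_n|^2 delta_{2 pi n} (pure point measure)."""
    return sum(abs(c) ** 2 * cmath.exp(2j * math.pi * n * t) for n, c in coeffs.items())
```

Here the correlations at integer times all equal $\|f\|^2$, the signature of pure point spectrum.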

Theorem 20 (Forni-Ulcigrai) Smooth time-changes of a horocycle flow have absolutely continuous spectrum.

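The proof uses quantitative bounds on the decay of the correlations $\langle f\circ\phi_t,f\rangle$. Since these bounds make $t\mapsto\langle f\circ\phi_t,f\rangle$ square-integrable on $\mathbb R$, $\sigma_f$ has an $L^2$ density, which implies absolute continuity.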

Fayad-Forni-Kanigowski consider smooth area-preserving flows on the $2$-torus with a stopping point.

12.3. Ratner property

M. Ratner used a quantitative form of shearing for unipotent flows.

Shearing takes some time. Ratner requires the following:

For all $\epsilon>0$ and all large enough $N$, there exists a set $X_\epsilon$ of measure $\ge1-\epsilon$ such that, for all pairs $x,y\in X_\epsilon$ not in the same orbit, but such that $d(x,y)<\delta$, there exist a time shift $p$ (in a fixed compact set) and a time $t_1$ such that

$$t_1\ge N$$

and

$$d(\phi_\tau x,\phi_{\tau+p}y)<\epsilon$$

for all $\tau\in[t_1,(1+K)t_1]$. Here $\delta>0$ and $K>0$ are constants depending only on $\epsilon$ and $N$.

For a long time, this was used only in algebraic dynamics, until a more flexible variant, called switchability, was introduced. It means that one can switch past and future.

Fayad-Kanigowski and Kanigowski-Kulaga-Ulcigrai established this variant for typical smooth area-preserving flows on surfaces. This implies mixing of all orders.

Here is another application of these ideas:

Theorem 21 (Kanigowski-Lemanczyk-Ulcigrai) For all nontrivial smooth time-changes $\tilde h_t$ of the horocycle flow, the rescaled flow $\tilde h_{rt}$, $r>0$, $r\neq1$, is not isomorphic to $\tilde h_t$.

They are actually disjoint in Furstenberg’s sense. Recall that the horocycle flow itself is isomorphic to its rescalings.

We use a disjointness criterion based on this switchable variant of Ratner’s property.

12.4. Summary

- Parabolic means slow butterfly effect.

- Typically slow mixing.

- Slow equidistribution.

- Disjointness of rescalings.

- Obstructions to cohomological equation.

Tools:

- Shearing.

- Ratner property and switchability.

- Renormalization.

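Let $q$ be a positive integer, $a$ an integer. There exist infinitely many prime numbers $p$ such that

$$p\equiv a\pmod q$$

if and only if

$$\gcd(a,q)=1.$$

Moreover, the residues mod $q$ of primes are evenly distributed among the $\varphi(q)$ classes prime to $q$.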
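As a small numerical illustration of this equidistribution (our own, with helper names of our choosing), here is a Python sieve counting primes up to $10^4$ in each residue class mod $q$. The classes prime to $q$ get roughly equal shares, while each of the other classes contains at most one prime.

```python
import math


def primes_up_to(n):
    """Sieve of Eratosthenes: list of primes <= n (n >= 2)."""
    sieve = bytearray([1]) * (n + 1)
    sieve[0] = sieve[1] = 0
    for p in range(2, math.isqrt(n) + 1):
        if sieve[p]:
            sieve[p * p :: p] = bytearray(len(range(p * p, n + 1, p)))
    return [i for i in range(n + 1) if sieve[i]]


def count_by_residue(q, n):
    """Number of primes p <= n in each residue class a mod q, as a list indexed by a."""
    counts = [0] * q
    for p in primes_up_to(n):
        counts[p % q] += 1
    return counts
```

For instance, `count_by_residue(10, 10000)` puts about a quarter of the $1227$ primes other than $2$ and $5$ in each of the classes $1,3,7,9$.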

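A function $\chi:\mathbb Z\rightarrow\mathbb C$ is a Dirichlet character mod $q$ if

$$\chi(ab)=\chi(a)\chi(b)\ \text{for all }a,b,\qquad\chi(a+q)=\chi(a)\ \text{for all }a,\qquad\chi(a)=0\iff\gcd(a,q)\neq1.$$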

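Its $L$-function is

$$L(s,\chi)=\sum_{n\ge1}\chi(n)\,n^{-s}.$$

If $\chi\neq\chi_0$, the trivial character, then $L(s,\chi)$ extends to an entire function. Dirichlet's theorem follows from

$$L(1,\chi)\neq0\qquad\text{for all }\chi\neq\chi_0.$$

The prime number theorem in arithmetic progressions follows from

$$L(s,\chi)\neq0\qquad\text{for all }s\text{ with }\mathrm{Re}(s)=1$$

and on a neighborhood of that line. Improving the remainder to be polynomial of degree $\theta<1$, i.e. $O(x^{\theta+\epsilon})$, amounts to nonvanishing of $L(s,\chi)$ on $\{\mathrm{Re}(s)>\theta\}$. This is hard. The special case $\theta=\frac12$ is known as the Generalized Riemann Hypothesis.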

In a random model where an integer $n$ has a probability to be prime which is $\frac1{\log n}$, the expected error is of order $x^{1/2}$, up to logarithmic factors.

Many natural questions about $L$-functions are statistical: what happens on average over the characters $\chi$ mod $q$, or over the moduli $q$?

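Let $\mathbb F_q$ denote a field with $q$ elements, and $\mathbb F_q[T]$ the ring of polynomials with coefficients in $\mathbb F_q$. I intend to replace integers with $\mathbb F_q[T]$. For instance, the Euclidean algorithm works on polynomials.

Denote by $\mathcal M\subset\mathbb F_q[T]$ the set of monic polynomials (thought of as an analogue of positive integers). For $f\in\mathcal M$, its absolute value is $|f|=q^{\deg f}$, which is equal to the cardinality of the quotient ring $\mathbb F_q[T]/(f)$.

A function $\chi:\mathbb F_q[T]\rightarrow\mathbb C$ is a Dirichlet character mod $Q$ if

$$\chi(ab)=\chi(a)\chi(b)\ \text{for all }a,b,\qquad\chi(a+Q)=\chi(a)\ \text{for all }a,\qquad\chi(a)=0\iff\gcd(a,Q)\neq1.$$

Its $L$-function is

$$L(s,\chi)=\sum_{f\in\mathcal M}\chi(f)\,|f|^{-s}.$$

$L(s,\chi)$ is entire for $\chi\neq\chi_0$, because it is a polynomial in $q^{-s}$, of degree less than $\deg Q$. Indeed,

$$\sum_{f\in\mathcal M,\ \deg f=d}\chi(f)$$

vanishes for $d\ge\deg Q$, since each residue class mod $Q$ occurs $q^{d-\deg Q}$ times and, by orthogonality of characters,

$$\sum_{a\bmod Q}\chi(a)=0.$$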
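Here is a brute-force check of this vanishing in a toy case of our own choosing: $q=3$ and $Q=T^2+1$, which is irreducible over $\mathbb F_3$. The characters mod $Q$ are then the characters $\chi_j$ of the cyclic group $\mathbb F_9^\times$, built here from a discrete logarithm. The sketch computes the coefficients $\sum_{\deg f=d}\chi_j(f)$ of $L$ as a polynomial in $u=q^{-s}$. All helper names are hypothetical.

```python
import cmath
import itertools
import math

P = 3  # toy field F_3; Q = T^2 + 1 is irreducible over F_3, so F_3[T]/(Q) has 9 elements


def mul9(a, b):
    """Product of a0 + a1*i and b0 + b1*i in F_3[i], i^2 = -1 (i = class of T mod Q)."""
    return ((a[0] * b[0] - a[1] * b[1]) % P, (a[0] * b[1] + a[1] * b[0]) % P)


def reduce_mod_q(coeffs):
    """Residue mod Q of a polynomial given by coefficients from the leading one down,
    computed by Horner's rule at the root i of Q."""
    acc = (0, 0)
    for c in coeffs:
        acc = mul9(acc, (0, 1))
        acc = ((acc[0] + c) % P, acc[1])
    return acc


def discrete_log_table():
    """Map each unit of F_9 to its exponent with respect to some generator."""
    units = [(a, b) for a in range(P) for b in range(P) if (a, b) != (0, 0)]
    for g in units:
        table, x = {}, (1, 0)
        for k in range(len(units)):
            table[x] = k
            x = mul9(x, g)
        if len(table) == len(units):  # g has order 8, i.e. generates F_9^*
            return table
    raise RuntimeError("no generator found")


LOG = discrete_log_table()


def chi(j, residue):
    """Character chi_j mod Q: chi_j(g^k) = exp(2 pi i j k / 8), and chi_j = 0 on non-units."""
    if residue == (0, 0):
        return 0
    return cmath.exp(2j * math.pi * j * LOG[residue] / 8)


def monic_sum(j, d):
    """Coefficient of u^d in L(u, chi_j) = sum over monic f of chi_j(f) u^(deg f)."""
    return sum(chi(j, reduce_mod_q((1,) + tail))
               for tail in itertools.product(range(P), repeat=d))
```

For $j\neq0$ the coefficients vanish from $d=2=\deg Q$ on, so $L(u,\chi_j)=1+c_1u$. When $\chi_j$ is odd ($j$ odd), one finds $|c_1|=\sqrt3=q^{1/2}$, so the root lies on $|u|=q^{-1/2}$. When $j\neq0$ is even, $c_1=-1$, which gives a zero at $u=1$.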

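As for integers, one gets nonvanishing of $L(s,\chi)$ for $\mathrm{Re}(s)=1$. If we can improve nonvanishing, we get information on the number of irreducible monic polynomials $P$ such that

$$\deg P=n\qquad\text{and}\qquad P\equiv A\pmod Q.$$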
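A brute-force Python sketch of this count, in the toy case $q=3$, $Q=T^2+1$ (our choice). It lists the monic irreducible polynomials of degree $n$ and checks their number against the classical formula $\frac1n\sum_{d\mid n}\mu(d)q^{n/d}$. It also sorts them by residue class mod $Q$. Helper names are ours.

```python
import itertools

P = 3  # toy field F_3; residues are taken mod Q = T^2 + 1


def monic_polys(d):
    """Monic polynomials of degree d over F_P, as coefficient tuples (constant term first)."""
    for tail in itertools.product(range(P), repeat=d):
        yield tail + (1,)


def polymul(a, b):
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] = (out[i + j] + x * y) % P
    return tuple(out)


def irreducible_monic(n):
    """Brute force: remove all products of two monic factors of positive degree."""
    reducible = set()
    for k in range(1, n // 2 + 1):
        for f in monic_polys(k):
            for g in monic_polys(n - k):
                reducible.add(polymul(f, g))
    return [f for f in monic_polys(n) if f not in reducible]


def mobius(n):
    result, m, p = 1, n, 2
    while p * p <= m:
        if m % p == 0:
            m //= p
            if m % p == 0:
                return 0
            result = -result
        p += 1
    return -result if m > 1 else result


def expected_count(n):
    """(1/n) * sum_{d | n} mu(d) P^(n/d): the number of monic irreducibles of degree n."""
    return sum(mobius(d) * P ** (n // d) for d in range(1, n + 1) if n % d == 0) // n


def residue_mod_q(f):
    """Residue of f mod T^2 + 1, as (a, b) meaning a + b*T, using T^2 = -1."""
    a = b = 0
    for c in reversed(f):           # Horner's rule from the leading coefficient
        a, b = (c - b) % P, a % P   # (a + bT) * T + c = (c - b) + aT
    return (a, b)


def counts_by_residue(n):
    counts = {}
    for f in irreducible_monic(n):
        r = residue_mod_q(f)
        counts[r] = counts.get(r, 0) + 1
    return counts
```

Only $Q$ itself falls in the class $0$. The remaining irreducibles spread over the $8$ invertible classes, in line with the equidistribution discussed in the text.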

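In this setting, the analogue of the Generalized Riemann Hypothesis is a theorem, as a consequence of Weil's work: $L(s,\chi)\neq0$ for $\mathrm{Re}(s)>\frac12$. Katz, Katz-Sarnak, Deligne answered statistical questions in the limit where $q$ tends to infinity.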

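A character $\chi$ mod $Q$ is primitive if there is no character $\chi'$ of smaller modulus $Q'\mid Q$ such that $\chi(a)=\chi'(a)$ for all $a$ with $\gcd(a,Q)=1$. A character $\chi$ is odd if $\chi(a)\neq1$ for some $a\in\mathbb F_q^\times$.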

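Suppose that $\chi$ is primitive and odd. Then $L(s,\chi)$ is a polynomial in $q^{-s}$ of degree exactly $\deg Q-1$, with all roots on the circle $|q^{-s}|=q^{-1/2}$. It follows that

$$L(s,\chi)=\det\big(1-q^{\frac12-s}\,\Theta_\chi\big)$$

is essentially the characteristic polynomial of $\Theta_\chi$, where $\Theta_\chi$ is a unitary matrix of size $\deg Q-1$ (well defined up to conjugacy).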

If $Q$ is squarefree (of fixed degree), there exists a map $\chi\mapsto\Theta_\chi$ such that the matrices $\Theta_\chi$ equidistribute in the unitary group as $q$ tends to infinity. This kills the hope of controlling nonvanishing simultaneously for all $\chi$.

Let $Q$ be squarefree of degree $n$. Then …, provided $q$ is large enough.

The proofs reduce to counting solutions of polynomial equations over $\mathbb F_q$. Since the middle of the 20th century, one has known that such counting can follow from the same topological techniques used to describe solutions over the complex numbers.

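One expresses the relevant sums over characters in terms of the number of tuples $(f_1,\dots,f_k)$ of monic polynomials such that $f_1\cdots f_k\equiv a\pmod Q$ and $\deg f_1+\cdots+\deg f_k=n$. Such a count is the number of $\mathbb F_q$-points of a union, over $k$-tuples of natural numbers summing to $n$, of sets, each of which is the set of solutions to a system of polynomial equations in several variables over $\mathbb F_q$ (the unknowns are the coefficients of the monic polynomials $f_i$).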

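Let $X$ be a scheme of finite type over $\mathbb F_q$. Then the number of points of $X$ over $\mathbb F_q$ is given by the Grothendieck-Lefschetz trace formula,

$$\#X(\mathbb F_q)=\sum_i(-1)^i\,\mathrm{tr}\big(\mathrm{Frob}_q\mid H^i_c(X_{\overline{\mathbb F}_q},\mathbb Q_\ell)\big).$$

By Deligne, the eigenvalues of $\mathrm{Frob}_q$ on $H^i_c$ are algebraic integers of absolute value at most $q^{i/2}$. So the contribution of $H^i_c$ is at most $q^{i/2}$ times the dimension of cohomology. This allows one to conclude.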

Let $Q$ be a monic squarefree polynomial of degree $m$ over a field $\mathbb F_q$. Let $a$ be prime to $Q$. Consider the union of the schemes parametrizing the set of tuples of monic polynomials whose product is congruent to $a$ modulo $Q$. Then the cohomology of this variety over $\overline{\mathbb F}_q$ splits as the sum of a boring piece (independent of $a$

) and an interesting piece which is

-dimensional if

, and otherwise its dimension is at most